(Non-)Incident Reports: reducing failures by understanding successes

More better is better than less worse

Some thoughts on lessons from safety management for software people. Hey come on, wake up — I haven’t lost you already, have I?!

Sidney Dekker’s work has been well known in software engineering circles for some time, thanks to constant references from the field of resilience engineering. He surfaced on my radar again when CommonCog’s newsletter referenced his article “Why Do Things Go Right”. It almost immediately occurred to me that many of its ideas apply quite well to the software world too.

As I thought about it, I did feel that for all the high-profile failures, most software actually does get shipped. For all the high-profile incidents, most software runs pretty well day in and day out. Features launch, bugs get fixed, and users are happy (ok, perhaps we have a mixed record on that last one). So why do most projects succeed — or, at the very least, why do most projects not fail? Can we do more of the things that make them not fail?

Hurtful as it is to our egos, we are not all above average, so skill alone doesn’t explain it. The article’s admittedly unscientific study identifies several factors that were more prevalent when things “went right” than when things “went wrong”. It’s an interesting and somewhat intuitive list. Summarizing, and adding examples from the software world:

1. Freedom to dissent: Successful teams have a culture of healthy debate where product, project, and technical choices can be challenged. There is no groupthink, and people don’t stay silent when they see potential issues. The environment provides psychological safety.

2. Active risk management: Teams are aware of, and actively manage, the things that could get in the way of getting work done. Many of the “typical” ways of working are just years of industry experience in active risk management translated into activities and actions. Standups facilitate communication. Code reviews push against the formation of knowledge silos and programmer blind spots, and facilitate continuous learning. Teams test their software and automate build and deploy to reduce or eliminate process variance and to reduce the risk to the organization from specialized knowledge.

3. Deference to expertise: Teams know who to defer to for a given issue. Rather than rely on HIPPO or BOGSAT (look ’em up if you haven’t heard about them and want a chuckle), they seek out the person with the right knowledge for the problem at hand. But they don’t defer blindly; see #1 and #2. One little caveat: ownership and responsibility are still a huge factor in who makes the ultimate decision. Successful orgs tend to ultimately defer to the responsible party even if they are not the domain expert, while fully expecting the responsible party to seek input from the domain expert.

4. Ability to say stop: This is a nice adjunct to #3 in that it empowers team members to practice “if you see something, say something”, even if they are not the ones with the most expertise. I personally see this as just a special case of #1. See also: andon cords.

5. Broken down barriers between departments and hierarchies: Collaboration happens across disciplines and levels as needed. In a nutshell, silos reduce the likelihood of success.

6. Don’t wait for audits and inspections to improve: Duh!

7. Pride of workmanship: One of my colleagues had a nice spin on this: a successful team has swagger. A lot of what people say about the allure of open source work for developers is embedded in this idea of challenging ourselves to be proud enough of our work that we are willing to share it with the world.

All of these are attributes of a culture and, as such, they are leading indicators (and definitely not metrics) of good performance. Dekker highlights both the importance of avoiding over-reliance on lagging indicators and, implicitly, of focusing on qualitative factors that can’t even be measured directly (although surveys are sometimes used as a loose proxy).

Let’s take a closer look at a couple of key quotes from Dekker’s piece.

“Safety is not about the absence of negatives; it is about the presence of capacities.”

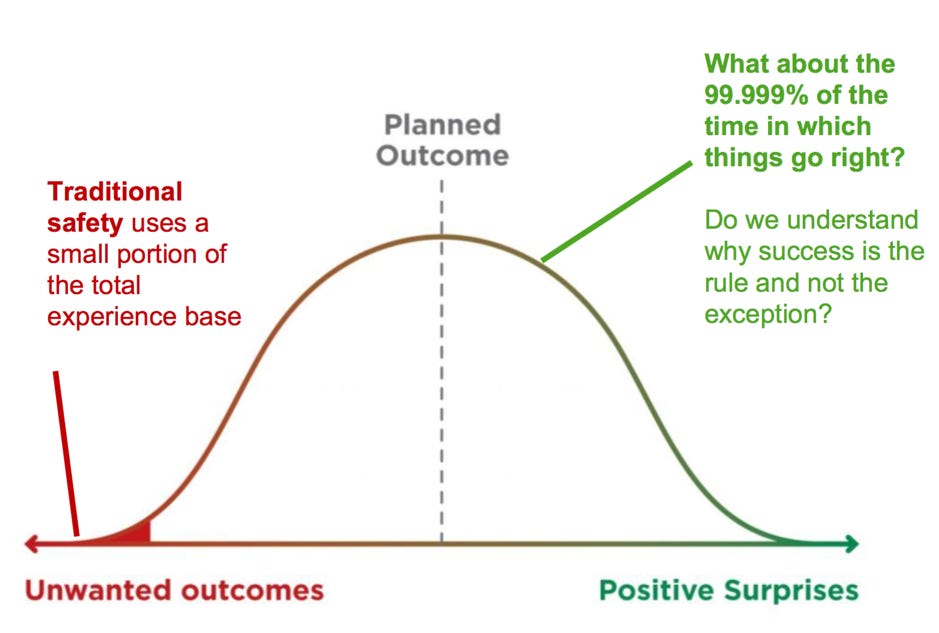

This is the core insight, of course, encouraging us to fight our instinct to focus on mistakes and failures. By developing the capacities that lead to successes, we can reduce the likelihood of unwanted outcomes by increasing the likelihood of the planned outcomes and even positive surprises.

“In between, the huge bulbous middle of the figure, sits the quotidian or daily creation of success. This is where good outcomes are made, despite the organizational, operational and financial obstacles, despite the rules, the bureaucracy, the common frustrations and obstacles.”

Implicit in this quote is the following: we typically identify problems in a system by studying the causes of failures and then trying to address those causes. This is the nuts and bolts of practices like retrospectives and incident management (even though the theory of retrospectives encourages as much focus on the positive as on the negative). But rather than focusing exclusively on fixing the things that caused failures, Dekker suggests we also try to reinforce the things that caused successes.

In that sort of logic, we’ve got great systems and solid procedures—it’s just those people who are unreliable or non-compliant.

This remark from Dekker is a quick nod to the idea that a focus on systems and procedures will likely yield better results than a focus on compliance.

Check back with me in a few months. I’m planning to apply these ideas, and maybe I’ll have learned something worth sharing!

Sidebar 1

This concept does have a loose parallel in professional development: better to be awesome at something than bad at nothing. Develop your skills more, and don’t worry about your weaknesses unless they are actively getting in the way of your goals.

Sidebar 2

The first time I remember feeling like I was reading Big Books was when my dad couldn’t stop telling me about the brilliance of the insight “catch people doing right” from the very first edition of The One Minute Manager. The phrase has been stuck in my head ever since and it remains a management principle to which I try to live up. An amazing and brilliant person (ha ha) I know once wrote this:

“Policy is culture made concrete” — a policy of regularly and frequently calling out things that people are doing right is an important tool in building and reinforcing a culture of more right, more of the time.

Sidebar 3

There’s a big overlap between Dekker’s list and the definition of a Generative Culture from Westrum’s organizational typology, popularized in software engineering circles by one of our Religious Tomes™, Accelerate. Here’s a rough take, with Dekker’s list on the left and a generative culture’s typological factors on the right.